Babylon React Native: Bringing 3D and XR to React Native Applications

Posts from early 2019 and early 2020 already started telling the story of Babylon Native and hinted at integration with React Native. In this post, I will provide an overview of the progress that has been made on this integration as well as the next steps.

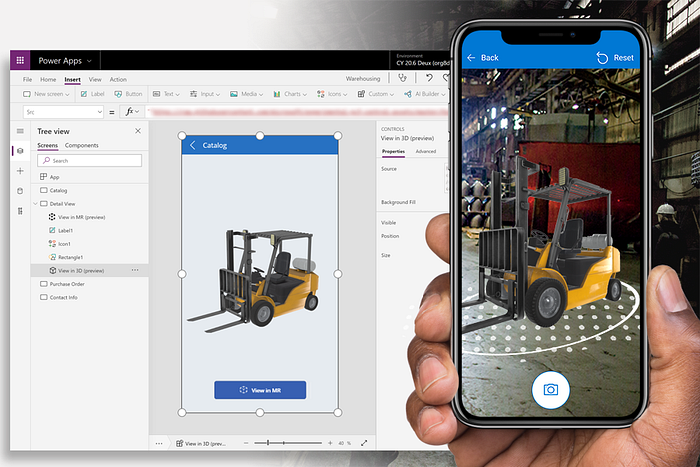

My name is Ryan Tremblay, and while I’m not on the Babylon team, we’ve had a great partnership in standing up Babylon React Native. About a year ago, a group within Microsoft began work on Mixed Reality features for Power Apps. After surveying the current landscape of AR development on iOS and Android, we decided to accelerate our time to market by having two separate native implementations built on top of SceneKit (iOS) and Sceneform (Android). This allowed us to start getting feedback quickly, but we knew this short-term solution wouldn’t scale. We wanted to bring our existing features to additional platforms (including web browsers), and we wanted to continue to add more features.

When considering our options for a longer-term solution, it quickly became apparent that Babylon.js and Babylon Native were a great match for these goals. Since Power Apps Mobile is already based on React Native, integration with this framework was critical for us, as was supporting the XR features of Babylon.js natively on iOS and Android. To help accelerate this work, my colleague Alex Tran and I jumped in, with lots of help from the Babylon team along the way.

What is Babylon React Native?

Babylon React Native is an integration layer on top of Babylon Native that combines the power of Babylon.js and React Native. The big benefits of bringing React Native into the picture are:

- A simple way to combine 3D rendering with platform-native, screen-space UI via platform-agnostic code.

- A mature developer toolset that does a lot of the heavy lifting of hosting a JavaScript engine, including developer features like fast refresh and debugging support.

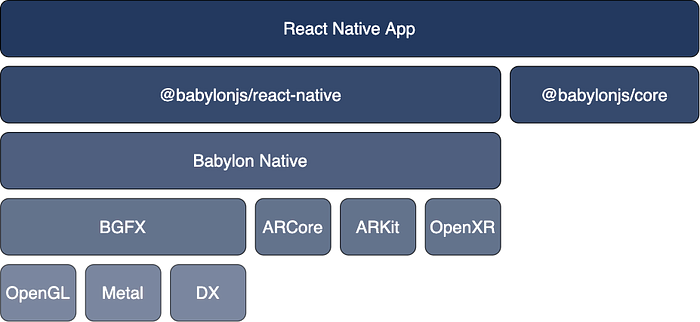

Some great architectural changes were made to Babylon Native to support working with an existing JavaScript engine instance (rather than managing one directly), and this has definitely better positioned it for future integrations with additional frameworks. The tech stack looks roughly like this:

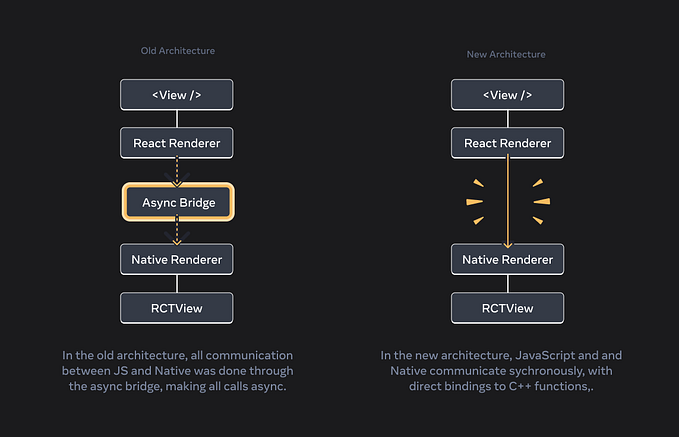

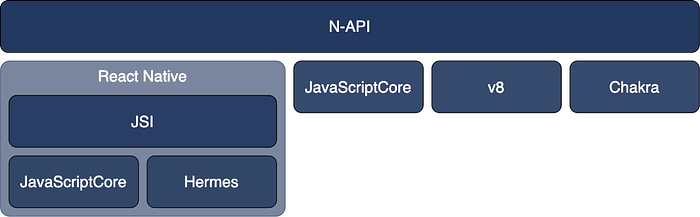

There is a lot going on in the box labeled @babylonjs/react-native, but one of the most important concepts is the communication between the JavaScript app code and the C++ framework code. Babylon Native relies on N-API (Node-API) for fast, synchronous communication between C++ and JavaScript. N-API has implementations for JavaScriptCore, V8, and Chakra for broad JavaScript engine compatibility.

React Native provides its own mechanism for synchronous communication between C++ and JavaScript called JSI (JavaScript Interface), which is conceptually very similar to N-API. Since React Native manages the JavaScript engine, the two are wired together by providing an N-API implementation on top of JSI, which is used whenever Babylon Native runs inside React Native.

This has a couple of notable implications:

- JavaScript engine support: all JavaScript engines supported by JSI are supported by Babylon React Native. This includes the React Native specific Hermes JavaScript engine. Note, however, that at this time the JSI-based implementation of N-API is not fully complete, and some functionality might not work yet.

- Debugging: any React Native module using JSI is not compatible with React Native’s remote debugging. Remote debugging runs the JavaScript code on the machine where the debugger is running (e.g. the desktop computer) rather than on the device where the React Native app is running (e.g. the mobile device). As a result, it only works when communication between the C++ and JavaScript code is relatively slow and asynchronous, which is not sufficient for real-time 3D rendering. Fortunately, Hermes implements the Chrome DevTools (inspector) protocol, which means that when using Hermes, app code can run directly on the device and be debugged from a separate (desktop) computer (often referred to as direct debugging).

How is Babylon React Native used?

An alpha version of Babylon React Native can be installed into a React Native application today via the @babylonjs/react-native npm package. There are a few additional configuration steps required, so if you want to try this out, be sure to take a quick look through the README.

From an API perspective, there are really only two integration points with React Native.

The first is the useEngine custom React hook, which manages the lifetime of a Babylon Native engine instance. Since Babylon React Native relies on a custom hook, it currently assumes the use of React function components rather than class components.

The second is the EngineView React component, which is bound to a Babylon camera and presents a scene.

These two constructs are meant to be used together: the engine returned by useEngine is used to create a scene and a camera, and that camera is then passed to EngineView.
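Here is a minimal sketch of how the hook and the component fit together, assuming the @babylonjs/react-native and @babylonjs/core packages are installed and configured as described in the README. The component name EngineScreen and the box mesh are purely illustrative, and since the package is still in alpha, the exact API may differ between versions.

```tsx
import React, { FunctionComponent, useEffect, useState } from 'react';
import { ViewProps } from 'react-native';
import { Camera, MeshBuilder, Scene } from '@babylonjs/core';
import { EngineView, useEngine } from '@babylonjs/react-native';

// Illustrative component name; not part of the library.
const EngineScreen: FunctionComponent<ViewProps> = (props) => {
  // The hook owns the engine's lifetime; the value is undefined
  // until the native engine has finished initializing.
  const engine = useEngine();
  const [camera, setCamera] = useState<Camera>();

  useEffect(() => {
    if (engine) {
      // Regular Babylon.js scene setup, same as in a browser.
      const scene = new Scene(engine);
      MeshBuilder.CreateBox('box', { size: 0.5 }, scene);
      scene.createDefaultCameraOrLight(true, undefined, true);
      // Handing the camera to React state triggers a re-render below.
      setCamera(scene.activeCamera ?? undefined);
    }
  }, [engine]);

  // EngineView presents the scene that the given camera belongs to.
  return <EngineView style={props.style} camera={camera} />;
};

export default EngineScreen;
```

This only runs inside a React Native app, so treat it as a starting point rather than a complete implementation. A real component would likely also dispose of the scene when the engine changes or the component unmounts.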

Of course EngineView can be combined with React Native UI components.
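As a rough sketch of what that can look like, the component below places a standard React Native Button under an EngineView. The SceneWithControls name and its props are hypothetical, and the camera is assumed to come from a scene created elsewhere with useEngine.

```tsx
import React from 'react';
import { Button, View } from 'react-native';
import { Camera } from '@babylonjs/core';
import { EngineView } from '@babylonjs/react-native';

// Hypothetical props for this illustration.
interface SceneWithControlsProps {
  camera?: Camera;      // camera of a scene created elsewhere
  onReset: () => void;  // app-defined handler, e.g. to reset the camera
}

export function SceneWithControls({ camera, onReset }: SceneWithControlsProps) {
  return (
    <View style={{ flex: 1 }}>
      {/* 3D content fills the available space */}
      <EngineView style={{ flex: 1 }} camera={camera} />
      {/* Platform-native UI laid out by React Native like any other view */}
      <Button title="Reset" onPress={onReset} />
    </View>
  );
}
```

Because EngineView is just another view in the React Native layout, the usual flexbox styling decides how the 3D content and the native controls share the screen.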

What about XR (augmented/mixed/virtual reality)?

I mentioned earlier in this post that XR support was important for Power Apps Mixed Reality features. While not tied to the React Native integration, XR support for iOS (via ARKit) and Android (via ARCore) was added as part of the same overall effort. Since Babylon Native already had experimental support for HoloLens (via OpenXR), much of the required XR infrastructure was already in place.

From a usage standpoint, app code can simply use the existing Babylon.js XR-related APIs. In a browser, these are backed by WebXR. In a native application, these are backed by ARKit, ARCore, or OpenXR (depending on the platform). If in the future an OpenXR implementation on top of ARKit and/or ARCore becomes available, we expect we could leverage it directly and reduce the amount of iOS/Android-specific XR support code.

WebXR, ARKit, ARCore, and OpenXR all have a common set of XR features, many of which are already supported across the board in Babylon.js and Babylon Native, including plane detection, hit testing, and anchors. Other features are only supported by a subset of platforms, such as direct access to the visual tracking feature points. In these cases, it is possible to query at runtime whether the feature is supported. We plan to continue to follow this pattern and add additional XR features in the future such as lighting estimation.
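The runtime check described above can be sketched roughly as follows. To keep the example self-contained, it defines small stand-in interfaces (XRFeatureLike, XRFeaturesManagerLike) and a stub manager, all of which are hypothetical. They are modeled on the Babylon.js WebXR features manager as an assumption (enableFeature with a trailing "required" flag, and isCompatible on the returned feature), so check the Babylon.js documentation for the actual signatures. The feature name strings are likewise assumptions.

```typescript
// Stand-in types so the sketch runs without dependencies. They mirror,
// as an assumption, the shape of the Babylon.js WebXR features manager.
interface XRFeatureLike {
  isCompatible(): boolean;
}

interface XRFeaturesManagerLike {
  enableFeature(
    name: string,
    version: string,
    options: object,
    attachIfPossible: boolean,
    required: boolean
  ): XRFeatureLike;
}

// Try to enable an optional feature; return undefined if the platform lacks it.
function tryEnableFeature(
  manager: XRFeaturesManagerLike,
  name: string
): XRFeatureLike | undefined {
  try {
    // required = false: a missing feature should not be treated as fatal.
    const feature = manager.enableFeature(name, "latest", {}, true, false);
    return feature.isCompatible() ? feature : undefined;
  } catch {
    // Some implementations may throw for unsupported features instead.
    return undefined;
  }
}

// Hypothetical stub for demonstration: supports only the listed feature names.
function makeStubManager(supported: string[]): XRFeaturesManagerLike {
  return {
    enableFeature: (name) => ({
      isCompatible: () => supported.indexOf(name) !== -1,
    }),
  };
}

const stub = makeStubManager(["xr-hit-test", "xr-plane-detection"]);
const hitTest = tryEnableFeature(stub, "xr-hit-test");
const featurePoints = tryEnableFeature(stub, "xr-feature-points");
console.log(hitTest !== undefined, featurePoints !== undefined); // true false
```

In an app, the stub would be replaced by the features manager of the active XR experience, and the undefined case is where the app would hide or disable UI that depends on the missing feature.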

Check out the video below created by Alex Tran for a quick demo of the Babylon Native XR features, as well as the benefits of combining Babylon Native with React Native such as fast refresh and easily being able to combine platform native UI with Babylon’s 3D rendering.

What’s next?

Much of the functionality to support both React Native integration and XR (iOS and Android) has been implemented and is available today in the published npm package. Various stability, functionality, and performance improvements are planned over the coming months.

Support for Windows and HoloLens is still in early stages of development and is ongoing.

We have done some prototyping around providing the same integration points (useEngine and EngineView) for ReactJS and hope to dive deeper into this to ensure we have a consistent programming model any time Babylon is combined with a React variant.

We also have some aspirations around declarative scene definitions using JSX (similar to https://github.com/brianzinn/react-babylonjs) as React Native has some great fast refresh optimizations when only JSX is changed, but no concrete plans here yet.

What else is needed for folks to adopt Babylon React Native? What other XR features are needed? I’d love to hear thoughts from the rest of the community on this!

Resources

To learn more about Babylon Native and the integration with React Native, check out these resources.